My research focuses on three areas: (1) Augmented and Virtual Reality, (2) Multimodal Interaction, and (3) Tangible User Interfaces. I have applied all three in a range of application areas, such as assistance systems for vulnerable road users and car drivers, evaluation environments for cyclists, remote communication between colleagues and family members, children’s education, and locomotion in Virtual Reality environments.

Augmented and Virtual Reality

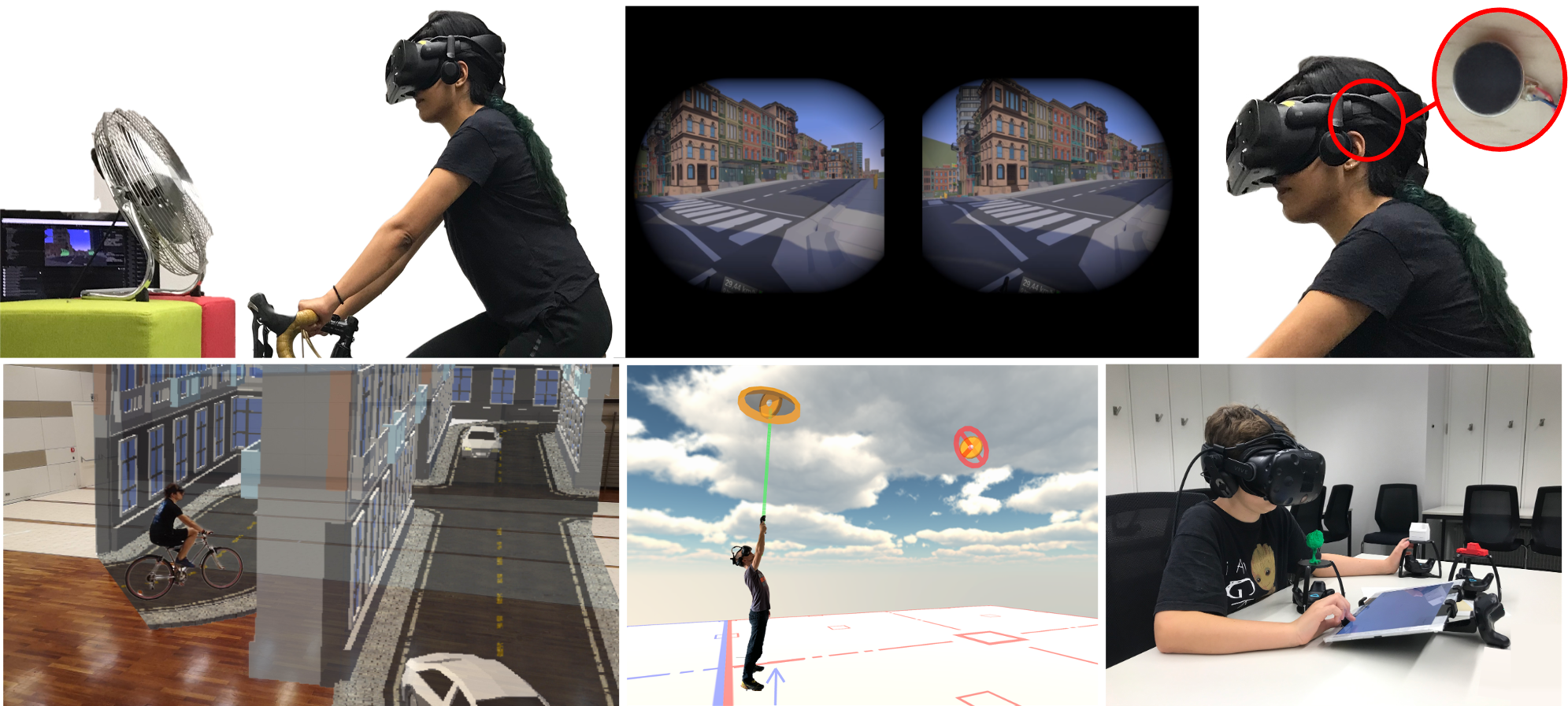

Using augmented and mixed reality interfaces, I worked on reducing motion sickness for cyclists in Virtual Reality (VR) bicycle simulators, contributed a new evaluation method for vulnerable road users (e.g., e-scooter riders and cyclists) based on Augmented Reality (AR) simulation, and explored children’s education and locomotion in VR environments. In the project on VR bicycle simulators, we studied how bicycle steering methods and external countermeasures affect motion sickness while cycling in VR. We compared handlebar, HMD, and upper-body steering methods and three countermeasures to reduce VR sickness: (1) airflow, (2) dynamic field-of-view restriction, and (3) two-sided head-mounted vibrotactile feedback (Figure, upper row). For the AR work, I developed a simulation in which cyclists could safely experience real cycling on a real bicycle while wearing AR glasses (Figure, lower row, left). Additionally, we investigated teleportation methods in virtual 3D spaces that facilitate quick and precise target finding without causing motion sickness. The idea was to enable teleportation to any point in a virtual environment in the most efficient way. The figure (lower row, middle) shows a participant attempting to teleport to a target above his head. Finally, we looked into ways to introduce children to VR technology by combining touch input and tangible user interfaces, with which they could create virtual worlds without programming (Figure, lower row, right).

Multimodal Interaction

I have primarily applied multimodal user interfaces to design assistance systems for cyclists. During my time in the Safety4Bikes project at the research institute OFFIS, I focused on modular assistance systems for cyclists that recognize impending dangers based on the current traffic situation and point out the correct behavior. In the event of acute dangers in the immediate vicinity or in potentially dangerous situations, the systems issued warnings via acoustic, visual, or haptic signals on the helmet or handlebar. I also considered different types of warnings and their suitability for child cyclists. As part of the project, I developed and gradually improved a bicycle simulator to carry out studies with children and test various interaction designs in a setting that is as realistic as possible, but still safe. I also developed different helmet designs to analyze attachment points for sensors and actuators on the helmet. In addition, I worked on projects that aimed to support drivers inside the car and to support communication between cars and vulnerable road users. In the former, I used ambient light for turn-by-turn instructions, focusing on how navigation information can be represented using ambient light in a car and which light parameters enable effective and non-distracting driving. In the latter, I explored external human-machine interfaces that communicate the intentions of automated vehicles using light signals. I investigated participants’ trust and readiness to cross a street in front of an autonomous car using eye-gaze tracking and a handheld slider.

The Safety4Bikes project, a larger research effort to which I contributed a substantial part, is presented in the following video:

Tangible User Interfaces

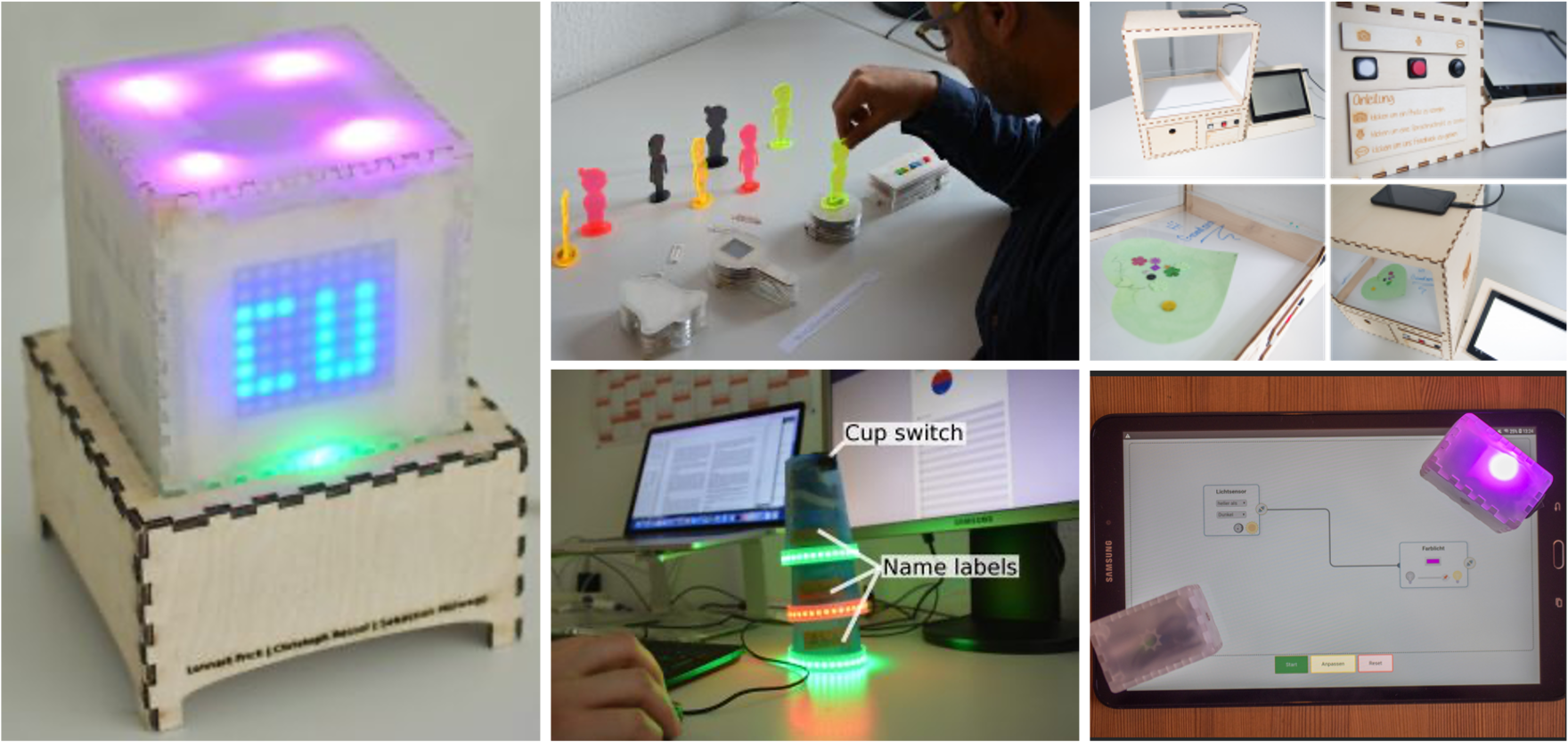

While working on tangible user interfaces, I looked into their capabilities to support remote communication between colleagues and family members, as well as children’s education. For this, we explored interactive tangible calendaring systems and metaphor-based interfaces to increase awareness between remote colleagues. Using CubeLendar (a tangible calendar shaped as a cube), users could get an overview of events, weather, time, and date by turning the cube in their hands. In the follow-up project, AwareKit, users could use touch and rotation as interaction techniques to access different types of information, and employ figures of colleagues to fetch specific calendar information. In another project, we explored metaphor-based tangible systems to increase colleagues’ awareness of each other, e.g., using a tin can metaphor. To facilitate communication between grandparents and grandchildren, we created a system that supports the exchange of visual and auditory messages. Family members could put the results of their crafting, writing, or music playing in the box and send them by pressing a single button. Additionally, we designed a tangible toolkit to help children learn about IoT devices. Using this system, children could create connections between inputs and outputs on a table and immediately see the result of their effort on the tangible blocks. The figure provides an overview of these works.